A number of us use a private site where we run an application offline. The application offers a download button so that we can take a snapshot of our offline progress, a sort of backup. Put the blob directly into a byte array, and use the byte array in the request stream. Sample:

```
// TempBlob contains the image, document, or whatever else
// ByteArray = System.Array from 'mscorlib'
// First, fill the byte array
TempBlob.Blob.CREATEINSTREAM(InStream);
MemoryStream := MemoryStream.MemoryStream;
COPYSTREAM(MemoryStream, InStream);
ByteArray := MemoryStream.ToArray;
BytesRead.
```
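For comparison, the same idea can be sketched in Python with only the standard library: copy the blob's input stream into an in-memory buffer, take its bytes, and use them as the request body. The endpoint URL and function names are hypothetical, and declaring the body as application/octet-stream is our assumption for a raw binary upload. The request is only built here, not sent.

```python
import io
import shutil
import urllib.request


def blob_to_bytes(instream) -> bytes:
    """Copy a readable binary stream into memory and return its bytes
    (roughly what COPYSTREAM + MemoryStream.ToArray do in the sample)."""
    buffer = io.BytesIO()
    shutil.copyfileobj(instream, buffer)
    return buffer.getvalue()


def build_upload_request(url: str, instream) -> urllib.request.Request:
    """Build (but do not send) a POST request whose body is the raw blob."""
    body = blob_to_bytes(instream)
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


# Hypothetical endpoint; nothing goes over the network in this sketch.
request = build_upload_request("https://example.invalid/backup", io.BytesIO(b"\x00\x01snapshot"))
# urllib stores header names via str.capitalize(), hence "Content-type".
print(request.get_method(), request.get_header("Content-type"), len(request.data))
# prints: POST application/octet-stream 10
```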

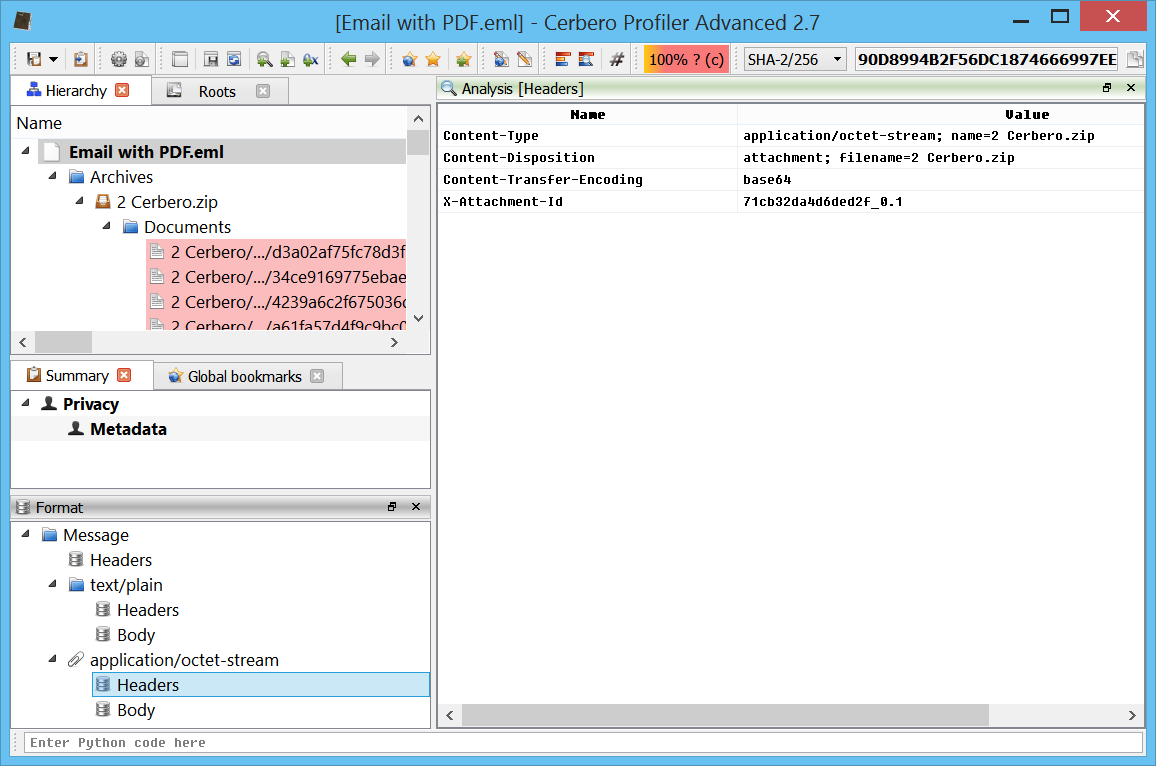

A MIME attachment with the content type 'application/octet-stream' is a binary file. Typically, it will be an application or a document that must be opened in an application, such as a spreadsheet or word processor. If the attachment has a filename extension associated with it, you may be able to tell what kind of file it is. A .exe extension, for example, indicates it is a Windows or DOS program (executable), while a file ending in .doc is probably meant to be opened in Microsoft Word.

No matter what kind of file it is, an application/octet-stream attachment is rarely viewable in an email or web client. If you are using a workstation-based client, such as Thunderbird or Outlook, the application should be able to extract and download the attachment automatically. After downloading the attachment, you must then open it in the appropriate application to view its contents.

Before opening attachments, even from trusted senders, you should follow the guidelines listed in Tips for staying safe online.

In addition to the generic application/octet-stream content type, you may also encounter attachments with more specific content types (for example, application/postscript, application/x-macbinary, and application/msword). They are similar to application/octet-stream, but apply to specific kinds of files.
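The rule of thumb above (trust a specific content type, otherwise fall back to the filename extension) can be sketched with Python's standard email package. The extension table is a deliberately tiny, hypothetical example rather than a complete registry, and `describe_attachment` is an illustrative name, not an existing API.

```python
from email.message import Message
from pathlib import PurePath
from typing import Dict

# Hypothetical, deliberately tiny extension table for illustration only.
KNOWN_EXTENSIONS = {
    ".exe": "Windows or DOS program (executable)",
    ".doc": "Microsoft Word document",
}


def describe_attachment(headers: Dict[str, str]) -> str:
    """Guess what a MIME attachment is from its Content-Type and filename."""
    msg = Message()
    for name, value in headers.items():
        msg[name] = value
    content_type = msg.get_content_type()  # e.g. 'application/octet-stream'
    if content_type != "application/octet-stream":
        # A specific type such as application/msword already says what it is.
        return f"specific type: {content_type}"
    # Generic binary data: fall back to the filename extension, taken from the
    # Content-Disposition 'filename' parameter or, failing that, Content-Type 'name'.
    filename = msg.get_filename()
    if not filename:
        return "unknown binary data"
    extension = PurePath(filename).suffix.lower()
    return KNOWN_EXTENSIONS.get(extension, f"binary file with extension '{extension}'")


print(describe_attachment({"Content-Type": 'application/octet-stream; name="setup.exe"'}))
# prints: Windows or DOS program (executable)
```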

23 November 2017 by

Summary

Handling data from Azure Storage blobs is not straightforward. The return value is initially binary (application/octet-stream) and needs to be cast to the data type you want to process; in our case, application/json.

This write-up is an easy-to-follow, real-world walkthrough of errors that beginners may encounter when handling Azure Storage blobs in Azure Logic Apps. They all happened to me.

Requirements

As soon as a new file (blob) arrives in an Azure Storage container, it should be processed by an Azure Function app.

Preparing the Playground

1) Create a new Azure Storage Account.

2) Create a new container in the storage account.

3) Create a new Azure Logic App.

4) Design the Logic App.

Challenges

A first draft could look like this.

This configuration has three steps:

- When one or more blobs are added or modified (metadata only) (Preview)
  - Created a connection to the newly created storage account.
  - Configured to look in the container 'files'.
  - Configured to look for changes every 10 seconds.
- Get blob content
  - Gets the content of a blob by ID. (There is a similar action called 'Get blob content using path' if you need to get blob contents via paths.)
- Azure Function
  - We call an Azure Function with the content of the blob.

Unfortunately, this configuration does not work because of two errors:

- there is no array of blobs

- data type issues

Trigger & Test the Logic App

I use the Microsoft Azure Storage Explorer to upload files into the container of my Azure Storage account to trigger my Azure Logic App. With every attempt, I increment the number in the name of my test file. The test file contains a simple JSON document that my Azure Function can interpret.
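If you prefer to prepare the numbered test files up front and then upload them with Storage Explorer, a small script can generate them. The file name pattern test-N.json and the payload fields are hypothetical; use whatever JSON your Azure Function expects.

```python
import json
import tempfile
from pathlib import Path


def next_number(folder: Path) -> int:
    """One more than the highest N among the existing test-N.json files."""
    numbers = [
        int(path.stem.split("-", 1)[1])
        for path in folder.glob("test-*.json")
        if path.stem.split("-", 1)[1].isdigit()
    ]
    return max(numbers, default=0) + 1


def write_test_file(folder: Path, number: int) -> Path:
    """Write test-<number>.json containing a small JSON payload."""
    # Hypothetical naming scheme and payload fields, for illustration only.
    path = folder / f"test-{number}.json"
    payload = {"id": number, "message": f"test file {number}"}
    path.write_text(json.dumps(payload), encoding="utf-8")
    return path


if __name__ == "__main__":
    # Generate three numbered files in a throwaway folder, ready for upload.
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp)
        for _ in range(3):
            print(write_test_file(folder, next_number(folder)).name)
        # prints test-1.json, test-2.json, test-3.json (one per line)
```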

After each upload, I go back to the Azure Portal to look for new trigger events and, shortly afterwards, for new run events. To avoid flooding the trigger history, I disable the Logic App after each upload and inspect the run results. Before each new attempt, I enable the Logic App again.

As you can see, our first configuration of the Azure Logic App did not run successfully. Let's inspect the first error!

Error 1: No Array

The return value of the first step is not an array! The raw data shows that the body contains no array. Maybe this is an exception because we uploaded only a single file? Let's try again with two files at the same time.

Now, I upload two files at the same time.

Surprisingly, the Logic App gets triggered for each new file separately.

So my assumption that the action When one or more blobs are added or modified (metadata only) (Preview) returns an array was wrong. The property Number of blobs misled me into thinking that the action would return an array.

We can resolve this error easily by removing the for-each loop and designing the flow so that the Logic App is triggered separately for each new or modified blob.
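The behaviour we observed (one run per new or modified blob, never a batch) can be pictured as a polling loop that compares two listings of the container and starts one run per changed blob. This is only a mental model under our own assumptions, not how the connector is actually implemented; the snapshot format (blob name mapped to an ETag) and the function names are hypothetical.

```python
from typing import Callable, Dict, List

Snapshot = Dict[str, str]  # blob name -> ETag (hypothetical listing format)


def changed_blobs(previous: Snapshot, current: Snapshot) -> List[str]:
    """Names of blobs that are new or whose ETag changed since the last poll."""
    return sorted(name for name, etag in current.items() if previous.get(name) != etag)


def poll_once(previous: Snapshot, current: Snapshot,
              start_run: Callable[[str], None]) -> Snapshot:
    """Start one separate run per changed blob and return the listing
    to compare against on the next poll (e.g. 10 seconds later)."""
    for name in changed_blobs(previous, current):
        start_run(name)  # one run per blob: no array, no for-each loop needed
    return current


runs: List[str] = []
before = {"test-1.json": "0x1"}
after = {"test-1.json": "0x1", "test-2.json": "0x2", "test-3.json": "0x3"}
poll_once(before, after, runs.append)
print(runs)  # ['test-2.json', 'test-3.json']
```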

Let's try again by uploading a new file. Again, the run history shows an error, but this time at the third step. The first two steps now run successfully: we retrieved the content of the blob that triggered the Logic App. So far, so good! Let's explore the new error!

The raw data of the second step looks fine, too.

Error 2: Data Type Issues

The Azure Function action in the third step throws the error UnsupportedMediaType with the message: 'The WebHook request must contain an entity body formatted as JSON.' That error may be confusing at first, because our file contains pure JSON data. A look at the content type reveals that the Logic App does not know we are handling JSON data; instead, it reports application/octet-stream, which is a binary content type.
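To see why the call fails even though the file holds JSON, consider what a JSON-only webhook endpoint checks: the declared content type, not the bytes. The handler below is a hypothetical stand-in for that check (it is not the Azure Functions runtime code); it answers with HTTP 415 Unsupported Media Type unless the request declares application/json.

```python
import json
from http import HTTPStatus
from typing import Tuple


def handle_webhook(content_type: str, body: bytes) -> Tuple[int, str]:
    """Hypothetical JSON-only webhook: the declared media type decides, not the bytes."""
    media_type = content_type.split(";", 1)[0].strip().lower()
    if media_type != "application/json":
        return (HTTPStatus.UNSUPPORTED_MEDIA_TYPE.value,
                "The WebHook request must contain an entity body formatted as JSON.")
    json.loads(body)  # raises ValueError if the body is not valid JSON
    return HTTPStatus.OK.value, "accepted"


blob = b'{"id": 1}'  # valid JSON, but labelled as binary below
print(handle_webhook("application/octet-stream", blob)[0])  # 415
print(handle_webhook("application/json", blob)[0])          # 200
```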

The Azure Function gets the following raw input:

The raw output of the Azure Function action looks like this.

And for reference the function stub of the Azure Function looks like this:

Convert into JSON data type

The documentation states that Logic Apps natively handle application/json and text/plain (see Handle content types in logic apps). Since we already have JSON data, we can use the @json() function to cast the content to application/json.
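Conceptually, @json() turns the binary blob content into a structured JSON value. The equivalent steps in Python (decode the bytes as text, then parse them) look like this; the UTF-8 encoding is an assumption about how the test files were saved, and the function name is hypothetical.

```python
import json
from typing import Any


def octet_stream_to_json(raw: bytes, encoding: str = "utf-8") -> Any:
    """Interpret raw application/octet-stream bytes as JSON.

    Assumes the blob holds JSON text in the given encoding; passing
    'utf-8-sig' additionally tolerates a leading byte-order mark.
    """
    return json.loads(raw.decode(encoding))


blob_content = b'{"id": 3, "message": "test file 3"}'  # hypothetical blob content
document = octet_stream_to_json(blob_content)
print(type(document).__name__, document["id"])  # dict 3
```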

Unfortunately, the Logic App Designer cannot save this @json() expression.

Error message

Save logic app failed
Failed to save logic app logicapp. The template validation failed: 'The template action 'ProcessBlob2' at line '1' and column '43845' is not valid: 'The template language expression 'json(@{body('Get_blob_content')})' is not valid: the string character '@' at position '5' is not expected.'.'.

Fortunately, this is only a small shortcoming of the Azure Logic App Designer. We need to look at the configuration in code view. To do so, click the three dots (...) in the upper-right corner of the Azure Function action and select Peek code from the menu.

We have to change the expression in the body property. It must not contain more than one expression wrapper @(). The documentation does not say explicitly how to nest expressions (see https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-workflow-definition-language#expressions), but after some trial and error we found that we just need to remove the nested expression wrapper @{ and } and leave everything else as it is.
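The manual fix in code view (removing the inner @{ and } around an expression that already sits inside another expression) amounts to a small string rewrite. The helper below is a hypothetical convenience for illustration, not a Logic Apps feature; it only handles inner wrappers whose content has no braces, and the sample value is modelled on the expression quoted in the error message.

```python
import re

# Matches an inner "@{...}" wrapper whose content contains no braces.
INNER_WRAPPER = re.compile(r"@\{([^{}]*)\}")


def remove_nested_wrappers(value: str) -> str:
    """Keep the leading '@' expression marker and strip '@{' / '}' from
    any wrapper nested inside the expression."""
    if not value.startswith("@"):
        return value  # plain string, not an expression
    return "@" + INNER_WRAPPER.sub(r"\1", value[1:])


before = "@json(@{body('Get_blob_content')})"
print(remove_nested_wrappers(before))  # @json(body('Get_blob_content'))
```

A top-level interpolation such as @{body('Get_blob_content')} is left untouched, because only wrappers that appear after the leading @ are rewritten.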

Let's check again whether it works by uploading a new file.

We check the run history again, and this time all actions ran successfully. Let's check the raw input and output.

Raw input:

Raw output:

The input data of the Azure Function action is now different: there is no explicit content type, just pure JSON data.

Final Logic App

The final Logic App looks like this in the designer. Unfortunately, not all expressions are visible there; you need to peek inside the code, as shown in the previous step.

To see everything, switch to code view. It is not a pleasant way to design, but it is good enough to check our configuration.

Helpful Links

Azure Logic Apps

Azure Function Apps

- https://stackoverflow.com/questions/10399324/where-is-httpcontent-readasasync